Fake it ‘til You Make Law: The AI Identity Crisis

- Introduction to Generative AI and Deepfakes

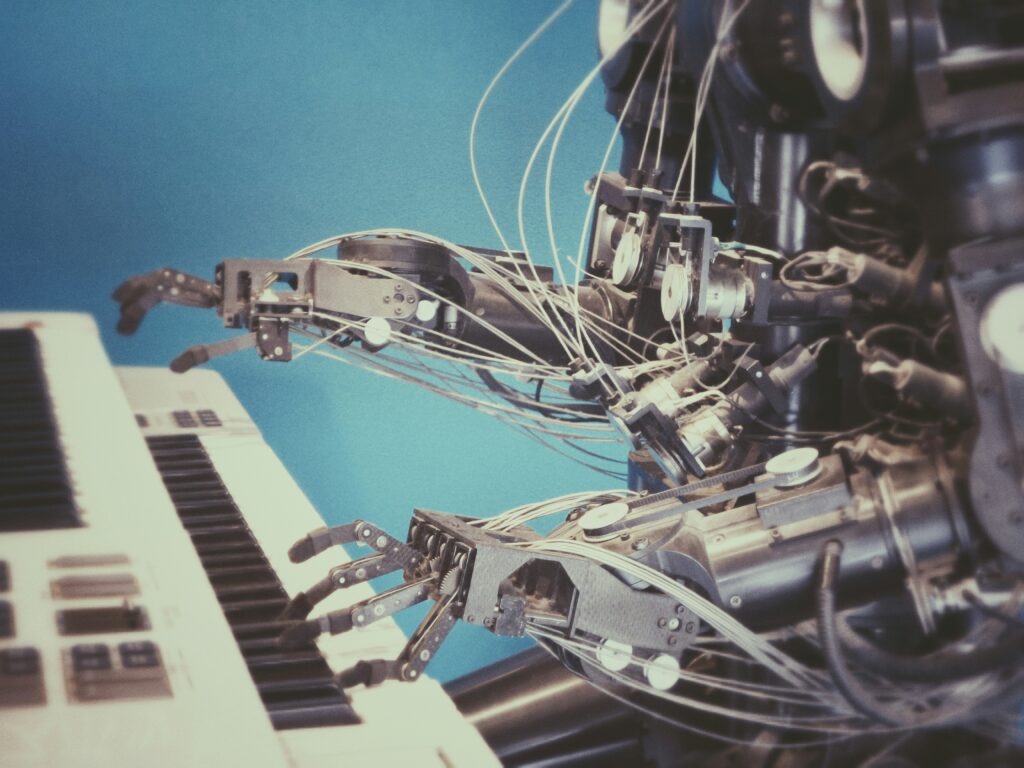

The advent of generative artificial intelligence (AI) and deepfake technology marks a new era in intellectual property law, presenting unprecedented challenges and opportunities. As these technologies evolve, their creations blur the line between reality and fiction, increasing the risk of consumer deception and diluting brand value. Deepfakes, a portmanteau of ‘deep learning’ and ‘fake,’ stand at the forefront of this revolution. These highly realistic synthetic media substitute one person’s image or voice for someone else’s likeness. They rely on deep learning, a subset of AI in which neural networks are trained to analyze and replicate human facial and vocal characteristics with striking accuracy. The result is a fabricated video or audio clip that can be virtually indistinguishable from authentic footage.

- Broad Applications and Challenges of Deepfakes

The spectrum of deepfake applications is vast, encompassing both remarkable prospects and significant risks. On the positive side, the technology promises to transform entertainment and education. It can bring historical figures to life for educational purposes or enhance films with unparalleled special effects. The downside, however, carries real consequences, particularly for businesses and brands. Because generative AI and deepfakes can produce highly convincing synthetic media that blurs the line between authentic and fabricated content, they pose substantial risks of consumer deception and brand damage.

When consumers encounter deepfakes featuring well-known trademarks, the harm goes beyond casting doubt on the authenticity of the media. It erodes the trust and loyalty that brands have cultivated over years. This effect on consumer perception and decision-making is central to understanding AI’s implications for trademark integrity, because it opens the door to deception and undermines the critical link between trademarks and consumer trust. The dual nature of deepfakes, as both a tool for creative expression and a source of potential deception, underscores the complexity of their impact on intellectual property and consumer relations.

- Trademark and Brand Identity in the Context of AI

While generative AI creates opportunities, it also multiplies the risks of consumer deception, and this direct threat to consumer perception exposes the growing vulnerability of trademarks. Trademarks lie at the heart of branding and commerce, distinguishing one business from another. They are not mere symbols. They represent the core identity of brands, from distinctive names and memorable phrases to captivating logos and designs. When consumers encounter these marks, they do not just see a name or a logo; they connect with a story, a set of values, and an assurance of quality. This connection underscores the pivotal role of trademarks in driving consumer decisions and fostering brand loyalty. In the United States, trademarks are protected by the Lanham Act, which provides nationwide protection against infringement and dilution. Infringement occurs when a mark identical or similar to an existing trademark is used in a way likely to confuse consumers about the origin or sponsorship of a product. Dilution, by contrast, refers to the weakening of a famous mark’s distinctiveness, either by blurring its meaning or by tarnishing it through offensive use.

However, the rise of generative AI and deepfake technology casts new and complex shadows over this legal terrain. The challenges these technologies introduce are manifold and unprecedented. Where it was once comparatively straightforward to distinguish intentional from accidental use of a trademark, that line is now increasingly blurred. When someone deliberately uses AI tools to replicate a trademark in order to deceive consumers or dilute a brand, the case for liability is clear. The waters are muddier when an AI system, through its intricate algorithms, incorporates a trademark into its output without anyone intending it to. Imagine an AI program designed for marketing that inadvertently includes a famous logo in its output. In that scenario, the line between infringement and innocent use becomes indistinct. The company employing the AI may never have intended to use the mark, yet the output could still suggest to consumers an affiliation with, or endorsement by, the trademark owner.

This new landscape necessitates a reevaluation of traditional legal frameworks and poses significant questions about the future of trademark law in an age where AI-generated content can replicate real brands with unnerving precision. The challenges of adapting legal strategies to this rapidly evolving digital environment are not just technical but also philosophical, calling for a deeper understanding of the interplay between AI, trademark law, and consumer perception.

- Legal Implications and Judicial Responses

The Supreme Court’s 2023 decision in Jack Daniel’s Properties, Inc. v. VIP Products LLC sets a key precedent on trademark fair use defenses that carries over into the age of AI. The case, which concerned a dog toy mimicking Jack Daniel’s whiskey branding, highlights the tension between trademark protection and First Amendment rights.

Although the case did not address AI directly, its principles bear heavily on this field. For both infringement and dilution claims, the Court narrowed the available defenses, particularly where an “accused infringer has used a trademark to designate the source of its own goods.” The Court also limited the scope of the noncommercial-use exclusion from dilution liability, stating that it “cannot include… every parody or humorous commentary.” This narrower scope makes it harder for users of AI-generated content to rely on fair use when parodying trademarks, because the lines between unlawful use, protected parody, and content that unintentionally confuses consumers about endorsement or sponsorship may blur.

The ruling is likely to have repercussions for AI models that generate noncommercial comedic or entertainment content featuring trademarks. Content that is noncommercial or intended as parody does not automatically qualify as fair use. If it depicts or references trademarks in a way that could falsely suggest sponsorship or source affiliation, a fair use defense becomes extremely difficult to sustain. In short, the noncommercial nature of AI-generated content offers little protection if it uses trademarks to imply a false association with, or endorsement by, the trademark owner.

As such, AI developers and companies must be cautious when depicting trademarks in AI-generated content, even in noncommercial or parodic contexts. The fair use protections may be significantly limited if the content falsely suggests a connection between a brand and the AI-generated work.

In this light, AI-generated content for marketing and branding requires meticulous consideration. AI developers must ensure their models do not generate content that falsely implies a trademark’s source or endorsement, which calls for thorough review processes and possibly algorithmic safeguards against false indications of source. Likewise, businesses using AI tools for branding, marketing, or content creation need stringent review of how their outputs depict or reference trademarks, so that their creations do not inadvertently infringe or mislead consumers about the origin or endorsement of products and services. Given AI’s capacity to replicate trademarks precisely, the potential for unintentional infringement and consumer deception is unprecedented.

This evolving landscape calls for a critical reevaluation of existing legal frameworks. Although robust in addressing the trademark issues of its time, the Lanham Act was enacted in 1946, long before the digital complexities and AI advancements we now face. The Court’s ruling in Jack Daniel’s could influence future legislation and litigation involving AI-generated content and trademarks. We now stand at a critical point where the replication of trademarks by AI algorithms challenges our traditional understanding of infringement and dilution. Addressing this requires more than piecemeal amendments to existing laws; it calls for a holistic overhaul of legal frameworks, which might involve new legislation, innovative interpretations, and an adaptive approach to defining infringement and dilution in the digital age. The challenge is not just to keep pace with technological advancements but to anticipate them and shape the legal landscape in a way that balances innovation with the need to protect the essence of trademarks in a rapidly changing world.

- Right of Publicity and Deepfakes

Deepfakes and similar AI fabrications threaten not only trademarks but also individual rights. The right of publicity, which shields personal likenesses, faces the same challenges of consent and authenticity in an era when deepfake identity theft can operate at scale. The right has gained renewed attention in the age of deepfakes, as illustrated by the unauthorized use of Tom Hanks’ likeness in a deepfake advertisement. That incident is a potent reminder of the challenges deepfake technology poses to intellectual property rights. California Civil Code Section 3344, among other state laws, protects individuals from the unauthorized use of their name, voice, signature, photograph, or likeness. Deepfakes, however, can replicate a person’s likeness with striking accuracy, raising complex questions about consent and misuse in advertising and beyond.

Deepfakes present a formidable threat to both brand reputation and personal rights. These AI-engineered fabrications can generate viral misinformation, perpetrate fraud, and inflict damage on corporate and personal reputations alike. By blurring the line between truth and deception, deepfakes undermine trust, dilute brand identity, and erode the foundational values upon which reputations are built. Their impact on brand reputation is not a distant concern but a present and growing one that demands vigilance and proactive measures from individuals and organizations. The intricate dynamics of consumer perception, shaped by such deceptive technology, underscore the urgency of a legal discourse that encompasses both trademark protection and the right of publicity in the digital age.

- Additional Legal and Industry Perspectives

While the complex questions surrounding AI, deepfakes, and trademark law form the core of this analysis, the disruptive influence of these technologies extends across sectors. The recent widespread dissemination of explicit AI-generated images of Taylor Swift is a stark example of the urgent need for regulatory oversight in this evolving landscape. The entertainment industry, and Hollywood in particular, is another sphere significantly affected by AI advancements. The discussions surrounding the 2023 SAG-AFTRA strike highlighted the critical issues of informed consent and fair compensation for actors whose digital likenesses are used. The use of generative AI technologies, including deepfakes, to create digital replicas of actors raises crucial questions about intellectual property rights and the ethical use of such technologies in the industry.

The legal and political landscape is also adapting to the challenges posed by AI and deepfakes. With the 2024 elections approaching, the Federal Election Commission has taken preliminary steps toward regulating AI-generated deepfakes in political advertisements, aiming to protect voters from election disinformation. In addition, a bipartisan group of senators has proposed the NO FAKES Act, a significant step toward establishing the first federal right to control one’s image and voice against unauthorized deepfakes: in effect, a federal right of publicity.

Legislative activity on AI regulation has also been notable, with several states proposing or enacting laws targeting deepfakes as of June 2023. President Biden’s October 2023 executive order on AI further reflects the government’s recognition of the need for robust AI standards and security measures. Yet the gap between the rapid progress of AI technology and the comparatively sluggish governmental and legislative response remains evident, as the limited scope of the executive order shows. The order calls for guidance on watermarking to identify AI-generated content, signaling a move toward a more regulated and rigorous environment for AI development and deployment. However, tools for removing watermarks are already readily available, and further tools designed to undermine such security measures are likely to follow. Together, these industry, legal, and political developments reflect the multifaceted approach required to address the complex issues arising at the intersection of AI, deepfakes, intellectual property, and personal rights.

- Ongoing Challenges and Future Direction

The ascent of generative AI and synthetic media ushers in a complex new era, posing unprecedented challenges to intellectual property protections, consumer rights, and societal trust. As deepfakes and similar fabrications become indistinguishable from reality, risks of mass deception and brand dilution intensify. Trademarks grapple with blurred lines between infringement and fair use while publicity rights wrestle with consent in an age of identity theft.

Given the potency of deepfakes in shaping narratives, detecting such content is essential. Several technologies have shown promise in recognizing deepfakes with high accuracy. Machine learning algorithms in particular have proven effective at spotting AI-generated videos by analyzing facial and vocal anomalies. Such tools are especially important in conflict zones, where they can help curb the spread of misinformation and malicious propaganda.

Recent governmental initiatives signal that legal frameworks are beginning to evolve to suit new technological realities. However, addressing the systemic vulnerabilities exposed by AI’s capacity to distort truth at scale demands a multifaceted response. Beyond legal remedies, restoring balance requires ethical guardrails in technological development, norms for responsible use, and greater public awareness.

In confronting this landscape, preserving the foundational pillars of perception and integrity remains imperative, even as new inventions test traditional boundaries. Our preparedness hinges on enacting safeguards grounded in values that meet this watershed moment, where human judgment confronts machine creativity. With technology rapidly outpacing regulatory oversight, preventing harm from generative models remains an elusive goal. But don’t worry. I am sure AI will come up with a solution soon.